The AI Wave: Will AI Ever Know What It Means to Be Human?

Rahman Hanafi

In the second chapter of this series, I argued that AI doesn’t feel and doesn’t truly create; it only generates. But what if I was asking the wrong question?

In this final chapter, I want to explore the other side of the argument. Could AI ever know what it means to be human? And if AI ever does wake up, what would that mean for us?

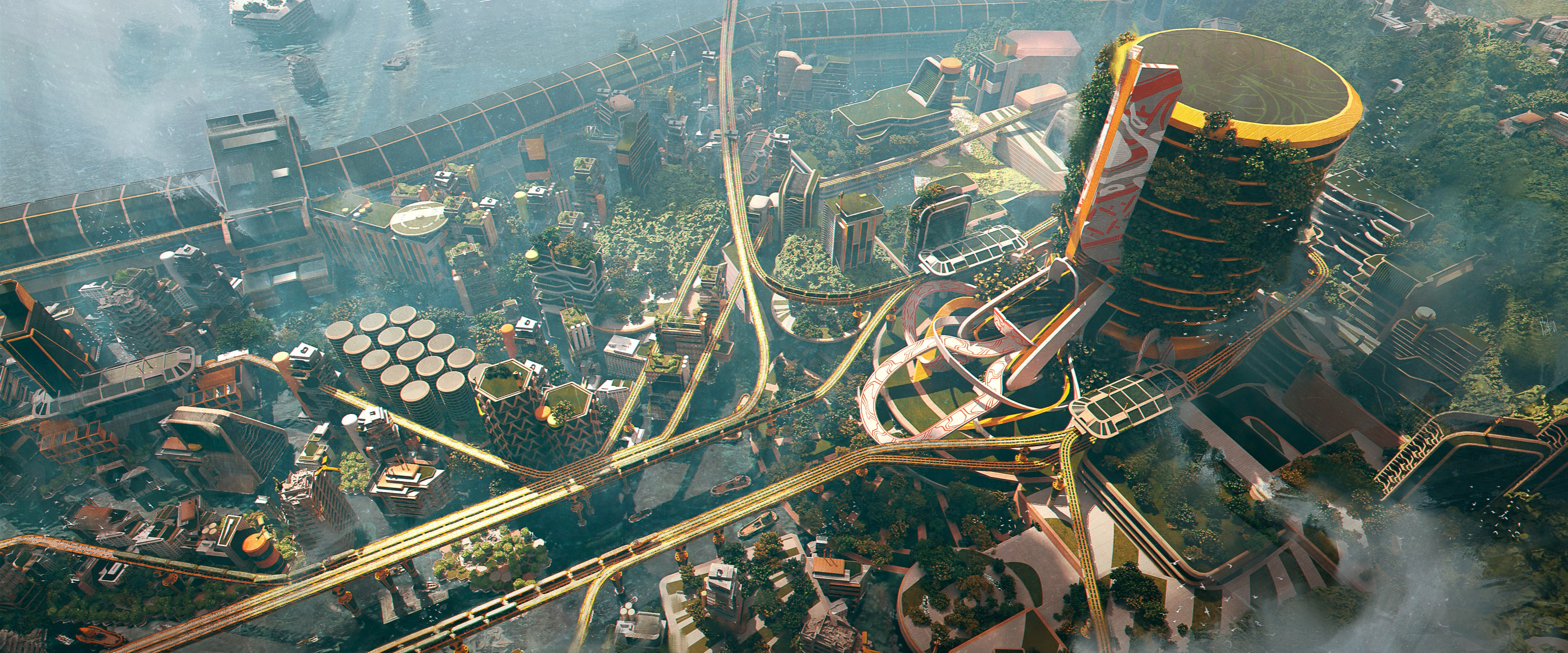

Artwork by Felix Riaño - Waterlogged City

I've been thinking a lot about consciousness lately. Not just the philosophical "I think, therefore I am," but the messy, complicated, beautifully flawed experience of being human. As AI systems become increasingly sophisticated, they're raising profound questions about the nature of consciousness and what it truly means to be human.

When I interact with AI, I'm often struck by moments of cognitive dissonance. The responses can be impressively articulate, even seemingly empathetic, but something's always missing. AI models can process information at remarkable speeds, and some are built on billions of parameters, yet they fundamentally lack what philosophers call "qualia," the subjective, inner experience of consciousness. They can describe the color red, but they don't see red.

And yet, some argue that these distinctions are becoming harder to make. AI's ability to convincingly simulate emotion through language is growing at a rapid pace. It can write a moving tribute or a heartbreaking story so well that it feels as if it understands. But this is the key difference: simulation is not lived experience.

Neuroscientific research has demonstrated that human consciousness cannot be separated from emotional experience and bodily sensation. Damasio and Damasio (2022) argue that "homeostatic feelings" are central to the biology of consciousness. Our decisions, even the most logical ones, are deeply tied to emotional processing in our brains, which is itself a form of bodily regulation (Caporale et al., 2021). AI, in contrast, operates purely on pattern recognition and statistical prediction.

Here's what fascinates me: an AI can describe grief, but it will never feel the hollow ache in its chest when remembering a lost loved one. It can analyze love poems throughout history, but it will never experience the flutter of butterflies in its stomach when seeing someone special walk into a room. These emotions aren't just extra features; they are fundamental to how we process and understand the world.

Recent research in affective neuroscience has shown that emotions create physical changes in our neural pathways, literally reshaping how we think and perceive. As Caporale, Perugini, and Zucchelli (2021) explored in their paper, this interplay of emotional experience and behavior forms a feedback loop between our body and our mind, a loop that no AI system, no matter how advanced, can currently replicate.

Perhaps we're asking the wrong question when we wonder if AI will ever be conscious. Consciousness might not be a simple on-off switch. In their Integrated Information Theory, Tononi, Boly, Massimini, and Koch (2014) suggest consciousness might exist on a spectrum, which is a compelling way to frame the issue. But here's the thing: even if AI develops some form of consciousness, it would likely be radically different from human consciousness. Our awareness is shaped by millions of years of evolution, by the experience of having a body, and by the complex interplay of hormones and neurons that create our emotional landscape.

What's particularly fascinating is how our interactions with AI are teaching us more about ourselves. As a recent review by Kaur and Gupta (2024) found, our tendency to project human traits onto AI, a phenomenon known as anthropomorphism, can greatly increase user engagement and trust. This is something we're wired to do. Yet, as scholars like Salles, Evers, and Farisco (2020) have noted, this anthropomorphism is often a "fallacy" that distorts our judgment and can even permeate the language used within AI research itself.

This projection reveals something profound about human consciousness: our desperate need for connection, our ability to find meaning in interaction, and our tendency to see ourselves reflected in others. These very human traits, our capacity for empathy, our search for meaning, and our ability to project consciousness, might be what makes us uniquely human.

The Future of Consciousness

The question of whether AI will ever truly understand what it means to be human might be less important than what our pursuit of artificial consciousness reveals about ourselves. As we build these systems that mimic human thought and behavior, we're forced to grapple with fundamental questions about consciousness, experience, and meaning.

This leads to what philosopher David Chalmers calls the "hard problem" of consciousness, which he first articulated in his seminal 1995 paper "Facing Up to the Problem of Consciousness" (Chalmers, 1995). The hard problem asks why physical processing gives rise to subjective experience at all, and it leaves a gap between observable behavior and inner experience. An AI can say "I'm sad" in a perfectly convincing way, but how do we know whether it's truly feeling sadness or simply generating the most statistically probable response based on its training data? This is a gap we may never be able to cross.

What makes us human isn't just our ability to think or process information; it's our capacity to feel deeply, to create meaning from experience, to be moved by beauty and crushed by loss. It's the way a song can transport us back in time, how a certain smell can unlock a flood of memories, and how a single touch can communicate more than a thousand words.

These experiences aren't just data points to be processed; they're the raw material of human consciousness, shaped by our bodies, our emotions, and our connections with others. AI might eventually simulate these experiences with perfect accuracy, but as we've explored, simulation isn't the same as lived experience.

The Human Paradox

Perhaps what makes us most human is our ability to question our own humanity, to wonder about consciousness while experiencing it, and to seek understanding while being shaped by that very search. It's a beautiful paradox, one that AI might help us understand better, even if it never experiences it itself.

After all, maybe the most human thing we can do is keep asking these questions, knowing that the answers might always be just beyond our reach. And if AI ever truly does wake up, the biggest question won’t be what it is; it will be who we become in response.

References

Caporale, C., Perugini, M., & Zucchelli, L. (2021). Neural pathways underlying the interplay between emotional experience and behavior, from old theories to modern insight. Open Journal of Medical & Health Sciences, 5(3), 11–19.

Chalmers, D. J. (1995). Facing up to the problem of consciousness. Journal of Consciousness Studies, 2(3), 200–219.

Damasio, A., & Damasio, H. (2022). Homeostatic feelings and the biology of consciousness. Brain: A Journal of Neurology, 145(6), 1989–1996.

Kaur, T., & Gupta, V. (2024). AI anthropomorphism: Effects on AI-human and human-human interactions. International Journal of Emerging Technologies and Innovative Research (IJETIR), 11(10), f1–f8.

Salles, A., Evers, K., & Farisco, M. (2020). Anthropomorphism in AI. AJOB Neuroscience, 11(2), 88–95.

Tononi, G., Boly, M., Massimini, M., & Koch, C. (2014). Integrated information theory: from consciousness to its physical substrate. Nature Reviews Neuroscience, 15(7), 473–481.